Dynamical systems, part 1: Introduction and definitions

This post is the first in a series on dynamical systems aiming towards explaining the contents of my PhD thesis. This first part will cover the basic definitions, objects of interest and important results on dynamical systems in general. The reader will be assumed to be familiar with the basics of calculus, topology and manifolds.

Introduction

Dynamical systems describe how objects and their properties change with respect to time. For example, they can describe the motion of a particle under the influence of a force such as gravity, how the concentrations of various substances change during a chemical reaction or how the number of fish in a lake changes from year to year.

Mathematically, a dynamical system consists of a space \(M\) called the phase space and a function \(\Phi\), commonly called the evolution function, which takes as its arguments a time \(t\) and a point \(x\) of phase space and outputs another point \(y=\Phi(t,x)\) of phase space, the point to which \(x\) has moved after time \(t\). We therefore also require that \(\Phi(0,x)=x\) for every point \(x\), i.e. if no time passes then the points do not move. Although in general the time parameter \(t\) can belong to any given monoid \(\mathcal{T}\), in practice either the real numbers \(\mathbb{R}\) or the integers \(\mathbb{Z}\) are used, possibly restricted to their non-negative parts. Therefore one usually also talks about continuous time dynamical systems and discrete time dynamical systems.

Discrete time dynamical systems are usually modeled simply as a map \(F\colon M\to M\) taking points of phase space to other points of phase space and the time \(t\) gives the number of iterations of this function. If the point \(x\) is mapped to the point \(y\) after time \(t=n\) we write \(y=F^{n}(x)\) where \(F^{n}\) denotes \(n\) iterations of \(F\). In other words, in terms of an evolution function \(\Phi\) we have \(\Phi(n,x)=F^{n}(x)\).
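As a minimal sketch of the relation \(\Phi(n,x)=F^{n}(x)\), the following Python snippet iterates a map a given number of times. The helper name `iterate` and the example map, a logistic model of a fish population with a made-up growth parameter, are assumptions chosen for illustration only.

```python
def iterate(F, n, x):
    """Return F^n(x), i.e. the evolution function Phi(n, x) for n >= 0."""
    if n < 0:
        raise ValueError("negative n requires an explicit inverse of F")
    for _ in range(n):
        x = F(x)
    return x


def logistic(x, r=2.5):
    """Hypothetical example map: scaled population x in [0, 1] one year later."""
    return r * x * (1.0 - x)


start = 0.2

# Phi(0, x) = x: if no time passes, the point does not move.
same = iterate(logistic, 0, start)

# Iterating 7 times is the same as iterating 3 times and then 4 more times.
direct = iterate(logistic, 7, start)
two_steps = iterate(logistic, 4, iterate(logistic, 3, start))
```

The last two lines illustrate that time steps add up: \(F^{4}(F^{3}(x)) = F^{7}(x)\).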

On the other hand, continuous time dynamical systems commonly arise as the flow of a set of differential equations where time is modeled as a subset of the real numbers \(\mathbb{R}\). Typically these differential equations are a set of ordinary differential equations with a finite dimensional phase space but they could also be partial differential equations with an infinite dimensional phase space.

For simplicity we will only be concerned with phase spaces which are finite-dimensional smooth manifolds and discrete time dynamical systems which are diffeomorphisms of their underlying phase space in the remainder of this post. Many concepts and results generalize to other settings but some require technicalities that are not suited for a blog post but better left to a proper textbook on dynamical systems. You can find some suggestions in the "Further Reading" section below.

Fixed points and other invariant sets

The goal of studying dynamical systems is often not to find a complete description of the orbit of every point, such as finding a closed expression for the solution of a system of ordinary differential equations, since such a description may be impossible to find. Instead one often focuses on finding a more qualitative description of a subset of the orbits. This can be achieved by studying certain special subsets of phase space that limit the behavior of orbits at or around them.

The simplest such subset is the fixed point. A fixed point of a dynamical system \(F\) is a point \(x\in M\) such that \(F(x) = x\), i.e., a point that doesn't move around but stays fixed in time.1 Fixed points are of interest not only because they are the simplest example of an invariant set but mainly because they can often be used to limit the behavior of nearby points as well. For example, consider a dynamical system \(F\) with a fixed point \(x_{0}\) such that all eigenvalues of \(D_{x_{0}}F\) are contained inside the unit circle, i.e., have absolute value less than one. There will then be some neighborhood \(U\) of \(x_{0}\) in which the dynamics is well-described by the linear map \(D_{x_{0}}F\) and, as a result, there is some constant \(C < 1\) such that

\[ d(F(x), x_{0})\leq C d(x, x_{0}) < d(x, x_{0}). \]

This means that the point \(x_{0}\) will attract all points in its vicinity. The fixed point is therefore called an attracting fixed point. Conversely, if all eigenvalues of \(D_{x_{0}}F\) are outside the unit circle the fixed point will instead repel all nearby points and is therefore called a repelling fixed point. Assuming that \(F\) is invertible we have that any attracting fixed point of \(F\) is also a repelling fixed point of \(F^{-1}\) and vice versa.
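To see the contraction estimate in action, here is a small numerical sketch in Python. It uses the one-dimensional map \(F(x)=\cos(x)\) as an assumed example (it is not a diffeomorphism of the real line, but only the behavior near the fixed point matters here). In one dimension the differential \(D_{x_{0}}F\) is just the number \(F'(x_{0}) = -\sin(x_{0})\).

```python
import math


def F(x):
    """Assumed example map with an attracting fixed point near 0.739."""
    return math.cos(x)


# Locate the fixed point x0 = cos(x0) by iterating F from a nearby start.
x0 = 1.0
for _ in range(200):
    x0 = F(x0)

# The single eigenvalue of the differential at x0 is F'(x0) = -sin(x0).
multiplier = abs(-math.sin(x0))

# Track the ratio d(F(x), x0) / d(x, x0) along an orbit starting near x0.
x = x0 + 0.1
ratios = []
for _ in range(10):
    ratios.append(abs(F(x) - x0) / abs(x - x0))
    x = F(x)
```

Each entry of `ratios` plays the role of the constant \(C\) in the inequality above, and the entries approach `multiplier` as the orbit gets closer to \(x_{0}\).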

Slightly more complicated are periodic points. These are points \(x_{0}\) for which \(F^{n}(x_{0}) = x_{0}\), i.e., they are fixed points of some iteration \(F^{n}\) of \(F\). The smallest such \(n\) is called the period of the periodic point. Note that if \(x_{0}\) is a periodic point then so are all points \(F^{i}(x_{0})\) for \(i = 0,1,\ldots,n-1\). Just as for fixed points, the orbit

\[ \mathcal{O}^{F}(x_{0}) = \{F^{i}(x_{0})\colon i\geq 0\} \]

is called attracting respectively repelling if it is attracting respectively repelling as a fixed point of \(F^{n}\).2
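The definition of the period can be checked by brute force. The sketch below uses the doubling map \(x\mapsto 2x \bmod 1\) on rational points as an assumed example; it is not invertible and hence outside the diffeomorphism setting of this post, but exact rational arithmetic makes the periodic orbit easy to see. The helper names `period` and `orbit` are hypothetical.

```python
from fractions import Fraction


def doubling(x):
    """Doubling map x -> 2x mod 1 on [0, 1) (illustration only)."""
    return (2 * x) % 1


def period(F, x0, max_period=1000):
    """Smallest n >= 1 with F^n(x0) == x0, or None if none is found."""
    x = x0
    for n in range(1, max_period + 1):
        x = F(x)
        if x == x0:
            return n
    return None


def orbit(F, x0, n):
    """The points x0, F(x0), ..., F^(n-1)(x0)."""
    points = [x0]
    for _ in range(n - 1):
        points.append(F(points[-1]))
    return points


x0 = Fraction(1, 7)
n = period(doubling, x0)
cycle = orbit(doubling, x0, n)
```

Here the orbit of \(1/7\) consists of \(1/7\), \(2/7\) and \(4/7\), and each of these points has the same period.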

In full generality an invariant set is a subset \(U\subset M\) such that for every \(x\in U\) we have \(F^{i}(x)\in U\) for all \(i\geq 0\). An attractor is then an invariant subset \(U\) such that there is a neighborhood \(B(U)\) of \(U\), called the basin of attraction of \(U\), with the property that for each \(b\in B(U)\) and any neighborhood \(V\) of \(U\) there is an \(N\geq 0\) such that \(F^{n}(b)\in V\) for all \(n\geq N\).

So far so good but the eigenvalues of the differential at a fixed point are not always all inside the unit circle or all outside the unit circle. What happens when this condition fails? We will explore the situation when the eigenvalues can be mixed but none of them are on the unit circle. In such cases, which include attracting and repelling fixed points, the fixed point is called hyperbolic. The same terminology also applies to periodic points: a periodic point \(x_{0} = F^{n}(x_{0})\) is called hyperbolic if it is a hyperbolic fixed point of \(F^{n}\).

When we have a hyperbolic fixed point the tangent space at the fixed point is also split into two parts: a stable subspace \(E^{s}\) corresponding to the eigenvalues inside the unit circle and an unstable subspace \(E^{u}\) corresponding to the eigenvalues outside the unit circle. If \(x_{0}\) is a hyperbolic fixed point we can therefore write

\[ T_{x_{0}}M = E^{s}\oplus E^{u}. \]

Furthermore, the stable and unstable subspaces will "integrate" into the stable manifold, denoted \(W^{s}(x_{0})\), and unstable manifold, denoted \(W^{u}(x_{0})\), respectively.
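For a linear map of the plane the fixed point at the origin can be classified directly from the eigenvalues of the matrix. The following Python sketch does this for a \(2\times 2\) matrix; the function names and the label "saddle" for the mixed case are my own choices for illustration.

```python
import cmath


def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] from the characteristic polynomial."""
    trace = a + d
    det = a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2


def classify_fixed_point(a, b, c, d, tol=1e-12):
    """Classify the origin as a fixed point of the linear map v -> [[a, b], [c, d]] v."""
    moduli = [abs(ev) for ev in eigenvalues_2x2(a, b, c, d)]
    if any(abs(m - 1.0) <= tol for m in moduli):
        return "not hyperbolic"      # some eigenvalue on the unit circle
    inside = sum(m < 1.0 for m in moduli)
    if inside == 2:
        return "attracting"          # E^s is the whole tangent space
    if inside == 0:
        return "repelling"           # E^u is the whole tangent space
    return "saddle"                  # E^s and E^u are both one-dimensional


kinds = [
    classify_fixed_point(0.5, 0.0, 0.0, 0.25),
    classify_fixed_point(2.0, 0.0, 0.0, 3.0),
    classify_fixed_point(2.0, 1.0, 1.0, 1.0),
    classify_fixed_point(0.0, -1.0, 1.0, 0.0),
]
```

The first three cases are hyperbolic; the last one is a rotation by a quarter turn, whose eigenvalues \(\pm i\) lie on the unit circle.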

The idea of the tangent space being split into a direct sum of a stable subspace and an unstable subspace naturally generalizes to larger invariant sets. Let \(U\subset M\) be a compact invariant set and suppose there are constants \(\lambda\in (0,1)\) and \(C > 0\) along with a splitting of the tangent space \(TM = E^{s}\oplus E^{u}\) over \(U\) such that for every \(x\in U\) we have:

- The splitting is invariant under \(F\), i.e., \[ D_{x}F(E^{s}(x))\subset E^{s}(F(x))\textrm{ and } D_{x}F(E^{u}(x))\subset E^{u}(F(x)), \]

- \(\Vert D_{x}F^{n}v\Vert \leq C\lambda^{n}\Vert v\Vert\) for all \(v\in E^{s}(x)\) and \(n\geq 0\),

- \(\Vert D_{x}F^{-n}v\Vert \leq C\lambda^{n}\Vert v\Vert\) for all \(v\in E^{u}(x)\) and \(n\geq 0\).

We then call \(U\) a hyperbolic set. In fact, the splitting is continuous in the sense that both \(E^{s}(x)\) and \(E^{u}(x)\) depend continuously on \(x\in U\). Hyperbolic fixed points are the simplest example of hyperbolic sets and just as for fixed points this invariant splitting "integrates" into invariant manifolds. This is the content of one of the most important theorems about hyperbolic sets and perhaps dynamical systems in general: the stable manifold theorem, sometimes called the stable-unstable manifold theorem.3 We state it here in terms of the local stable and unstable manifolds but its proof is well beyond the scope of a simple blog post. The interested reader can find slightly more general statements as well as their proofs in the textbooks suggested as further reading at the end of this post.

Stable manifold theorem: Let \(F\) be a \(C^{1}\)-diffeomorphism of \(M\) and let \(U\) be a hyperbolic set for \(F\). Then there exists an \(\varepsilon > 0\) such that for every \(x\in U\)

- the sets \[W^{s}_{\varepsilon}(x) = \{y\in M\colon d(F^{n}(x), F^{n}(y)) < \varepsilon\quad\forall n\geq 0\},\] \[W^{u}_{\varepsilon}(x) = \{y\in M\colon d(F^{-n}(x), F^{-n}(y)) < \varepsilon\quad\forall n\geq 0\},\] called the local stable manifold of \(x\) and the local unstable manifold of \(x\) respectively, are \(C^{1}\)-embedded balls,

- \(T_{y}W_{\varepsilon}^{s}(x) = E^{s}(y)\) for all \(y\in W_{\varepsilon}^{s}(x)\) and \(T_{y}W_{\varepsilon}^{u}(x) = E^{u}(y)\) for all \(y\in W_{\varepsilon}^{u}(x)\),

- \(F(W_{\varepsilon}^{s}(x))\subset W_{\varepsilon}^{s}(F(x))\) and \(F^{-1}(W_{\varepsilon}^{u}(x))\subset W_{\varepsilon}^{u}(F^{-1}(x))\),

- for every \(x\in U\) the map \(F\) contracts distances along \(W_{\varepsilon}^{s}(x)\) by \(\lambda\) and \(F^{-1}\) contracts distances along \(W_{\varepsilon}^{u}(x)\) by \(\lambda\).

Furthermore, it is also possible to define the global stable and unstable manifolds of a point \(x\) in a hyperbolic set, simply denoted \(W^{s}(x)\) and \(W^{u}(x)\) and defined as follows:

\[ W^{s}(x) = \left\{y\in M\colon \lim_{n\to \infty}d(F^{n}(x), F^{n}(y)) = 0\right\}, \]

\[ W^{u}(x) = \left\{y\in M\colon \lim_{n\to\infty}d(F^{-n}(x), F^{-n}(y)) = 0\right\}. \]

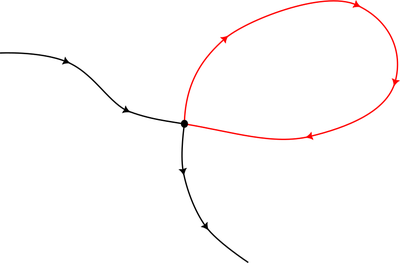
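The defining conditions of the local stable and unstable manifolds can be tested numerically in the simplest possible case. The sketch below assumes the linear map \(F(x,y) = (x/2, 2y)\) of the plane, whose only fixed point is the origin, and checks the condition \(d(F^{n}(0), F^{n}(p)) < \varepsilon\) for finitely many \(n\) only, so it is a sanity check rather than a proof.

```python
import math


def F(p):
    """Assumed example: contracts the x-direction, expands the y-direction."""
    x, y = p
    return (0.5 * x, 2.0 * y)


def F_inv(p):
    """Inverse of F."""
    x, y = p
    return (2.0 * x, 0.5 * y)


def stays_within(step, p, eps, n_max=50):
    """Check |step^n(p)| < eps for n = 0, ..., n_max.

    Since the origin is fixed, |step^n(p)| is the distance between the
    orbits of the origin and of p at time n.
    """
    for _ in range(n_max + 1):
        if math.hypot(p[0], p[1]) >= eps:
            return False
        p = step(p)
    return True


on_x_axis = stays_within(F, (0.05, 0.0), 0.1)        # in the local stable manifold
off_x_axis = stays_within(F, (0.05, 1e-6), 0.1)      # escapes along the y-direction
on_y_axis = stays_within(F_inv, (0.0, 0.05), 0.1)    # in the local unstable manifold
```

For this map the local stable manifold of the origin is a piece of the \(x\)-axis and the local unstable manifold is a piece of the \(y\)-axis, in agreement with the eigenspaces \(E^{s}\) and \(E^{u}\).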

The existence of the stable and unstable manifolds on a hyperbolic set can go a long way towards getting a description of the possible dynamics of \(F\). Consider for example the concept of homoclinic connections and homoclinic intersections. Let \(x_{0}\) be a hyperbolic fixed point. A homoclinic connection is then a connected invariant manifold \(N\subset M\) such that \(N\subset W^{s}(x_{0})\cap W^{u}(x_{0})\). Imagine a dynamical system \(F\) on \(\mathbb{R}^{2}\) with a hyperbolic fixed point \(x_{0}\) having a \(1\)-dimensional stable manifold and a \(1\)-dimensional unstable manifold, both of which are curves in the plane intersecting transversally at \(x_{0}\). One can then imagine one part of the "outgoing" unstable manifold turning back in to the fixed point to become one part of the stable manifold, see the below image.

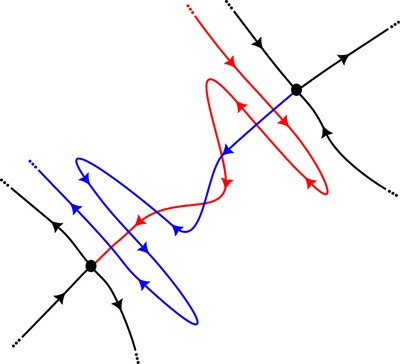

A homoclinic intersection is a different situation where the stable and unstable manifolds intersect but do not form a connection, for example if they intersect transversally. It is then clear that if \(y\in W^{s}(x_{0})\cap W^{u}(x_{0})\) then, since both \(W^{s}(x_{0})\) and \(W^{u}(x_{0})\) are invariant, every point \(F^{n}(y)\) must also be a point of intersection between \(W^{s}(x_{0})\) and \(W^{u}(x_{0})\). This situation creates something called a homoclinic tangle, a complicated structure with complicated dynamics, illustrated in the below image.

There are also heteroclinic connections and heteroclinic intersections. Like their homoclinic counterparts they signify situations where stable and unstable manifolds overlap as a connected manifold or intersect but originate from different hyperbolic fixed points. Here's an illustration of a heteroclinic connection.

And here's an illustration of a heteroclinic intersection.

Note that the above situations are generic but simplified in the sense that the homoclinic and heteroclinic intersections are transverse. There are however also intersections which are not transverse which bring further complications.

We will return to the homoclinic and heteroclinic connections and intersections in the next part of this series: Chaos. For now, we will briefly explore another way in which hyperbolic sets exert their influence: they not only shape the dynamics on the phase space, but their mere existence can sometimes restrict the possible topology of the phase space itself.

Extreme examples of hyperbolic sets and their influence are given by the so-called Anosov diffeomorphisms. These are diffeomorphisms for which the entire phase space is a hyperbolic set. This poses some severe restrictions on both the topology of the underlying phase space and also on the dynamics of the diffeomorphism itself. For example, it is immediately clear that the two-sphere \(S^{2}\) does not admit any Anosov diffeomorphisms since that would imply a global splitting \(TS^{2} = E^{s}\oplus E^{u}\) where both \(E^{s}\) and \(E^{u}\) are one-dimensional, i.e., this would give us a parallelization of the tangent bundle of \(S^{2}\). On the other hand, the set of Anosov diffeomorphisms is an open subset of \(\textrm{Diff}^{1}(M)\), meaning that any sufficiently small perturbation of an Anosov diffeomorphism is also an Anosov diffeomorphism. Additionally, when Anosov diffeomorphisms do exist they are structurally stable, meaning that if \(F\colon M\to M\) is an Anosov diffeomorphism and \(G\in \textrm{Diff}^{1}(M)\) is close enough to \(F\) in the \(C^{1}\)-metric then we can always find a homeomorphism \(h\colon M\to M\) conjugating \(F\) and \(G\), i.e., \(F\circ h = h\circ G\), which is close to the identity map on \(M\) in the \(C^{0}\)-metric.

Measures and ergodicity

While hyperbolic sets, stable manifolds and homoclinic tangles are an important part of the analytic aspect of dynamical systems, another very important aspect of dynamics is its statistical properties. Where the analytic aspect uses the language of derivatives and smooth manifolds, the statistical aspect uses the language of measures and integrals. As such, we need to consider phase spaces which are measure spaces. Typically we will consider probability spaces, i.e., measure spaces \(M\) having a measure \(\mu\) such that \(\mu(M) = 1\). Just as smooth dynamical systems behave well with respect to a differentiable structure we also consider measure-preserving dynamical systems which behave well with respect to a probability measure. Formally we say that a dynamical system \(F\) is measure-preserving with respect to the probability measure \(\mu\) if

\[ \mu(F^{-1}(A)) = \mu(A) \]

for every measurable subset \(A\).4 We also say that the measure \(\mu\) is an invariant measure with respect to the dynamical system \(F\).
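The role of the preimage \(F^{-1}(A)\) in this definition is easiest to see for a map that is not invertible. As a hedged illustration, the Python sketch below takes the doubling map \(F(x) = 2x \bmod 1\) on \([0,1)\) with Lebesgue measure (an assumed example, not one of the diffeomorphisms considered earlier) and compares the length of an interval with the length of its preimage, using exact rational arithmetic.

```python
from fractions import Fraction


def preimage_of_interval(a, b):
    """Preimage of [a, b) under the doubling map F(x) = 2x mod 1 on [0, 1).

    Each point x has the two preimages x/2 and (x + 1)/2, so the preimage of
    an interval is a union of two disjoint intervals of half the length.
    Assumes 0 <= a < b <= 1.
    """
    return [(a / 2, b / 2), ((a + 1) / 2, (b + 1) / 2)]


def lebesgue(intervals):
    """Total length of a list of pairwise disjoint intervals [lo, hi)."""
    return sum(hi - lo for lo, hi in intervals)


A = (Fraction(1, 5), Fraction(3, 4))
measure_of_A = lebesgue([A])
measure_of_preimage = lebesgue(preimage_of_interval(*A))

# The forward image behaves differently: F maps [0, 1/2) onto all of [0, 1),
# which doubles the length. This is why the definition uses F^{-1}(A).
measure_of_half = lebesgue([(Fraction(0), Fraction(1, 2))])
measure_of_image_of_half = lebesgue([(Fraction(0), Fraction(1))])
```

The two values `measure_of_A` and `measure_of_preimage` agree, while the forward image of \([0, 1/2)\) has twice the measure of the set itself.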

Given that we often start out with a dynamical system already defined on some phase space, how often is it possible to find an invariant measure? If invariant measures are an incredibly rare phenomenon one might not expect them to be very important. This question is answered, at least in part, by the Krylov-Bogolyubov theorem.

Krylov-Bogolyubov theorem: Let \(M\) be a compact metric space and let \(F\) be a continuous map of \(M\). Then there exists an \(F\)-invariant Borel probability measure on \(M\).

According to this theorem then, invariant measures are quite common. For instance, any continuous discrete time dynamical system defined on a compact smooth manifold will always have an invariant measure.

The Krylov-Bogolyubov theorem can actually be proven without too much difficulty, so we will go over the main points of a proof. Key to the proof is the Riesz representation theorem which for us means that5 for any positive continuous linear functional \(L\) on the space \(C(M)\), the space of continuous functions \(f\colon M\to \mathbb{R}\), there is a finite Borel measure \(\mu\) on \(M\) such that

\[ L(f) = \int_{M}fd\mu \]

for all \(f\in C(M)\). The strategy will therefore be to create a positive continuous linear functional on \(C(M)\) starting with the dynamical system \(F\) as in the assumptions of the theorem. To start with we will select a countable and dense subset of functions6 \(\mathcal{F}\subset C(M)\) and consider the time average \(S_{f}^{n}(x) = \frac{1}{n}\sum_{i=0}^{n-1}f(F^{i}(x))\) of any function \(f\in\mathcal{F}\) under the dynamical system \(F\). Now since the phase space \(M\) is compact the sequence of time averages \(S_{f}^{n}(x)\) is bounded and hence has a convergent subsequence and by countability of the set \(\mathcal{F}\) we can find a sequence \(n_{i}\to\infty\) such that the sequences of time averages \(S_{f}^{n_{i}}\) converge as \(i\to\infty\) for every \(f\in\mathcal{F}\). In fact, using this convergence of time averages for all \(f\) in a dense subset we can show that the time averages will converge for any function \(g\in C(M)\) and we use this fact to create the bounded linear functional \(L_{x}\) that maps a function to its time average at \(x\). This linear functional gives us what we need in order to apply the Riesz representation theorem and get a finite Borel measure \(\mu_{x}\) and it is not difficult to show that it will be \(F\)-invariant since the linear functional is.

This settles the question of how common measure-preserving dynamical systems are but it remains to be seen how measure-preservation can impact the dynamics. To show this we will present a theorem called the Poincaré recurrence theorem.

Poincaré recurrence theorem: Let \(F\) be a measure-preserving dynamical system of a probability space \(M\) and let \(U\subset M\) be a measurable subset. Then for almost every point \(x\in U\) there is an integer \(n\geq 1\) such that \(F^{n}(x)\in U\).

What this theorem tells us is that for any measurable subset \(U\) almost every point will return to it at some point. In fact, almost every point will return infinitely often to the subset \(U\). Another way of putting it is that the set of points in any measurable subset \(U\) that never return has measure zero.

The Poincaré recurrence theorem allows us to think of measure-preserving dynamical systems as, in some sense, making sure that the points of phase space are being very well mixed7 by the dynamics, at least within the support of the invariant measure.
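Recurrence is easy to observe numerically. The sketch below assumes a rotation of the circle \([0,1)\) by the irrational number \((\sqrt{5}-1)/2\), which preserves Lebesgue measure, and records the first few times at which the orbit of a point in \(U = [0, 0.1)\) comes back to \(U\). The function name `return_times` and the choice of \(U\) are illustrative.

```python
import math

ALPHA = (math.sqrt(5) - 1) / 2  # assumed rotation number


def rotate(x):
    """Rotation of the circle [0, 1) by ALPHA."""
    return (x + ALPHA) % 1.0


def return_times(x, lo, hi, count, max_iter=100000):
    """First `count` times n >= 1 with F^n(x) in [lo, hi)."""
    times = []
    for n in range(1, max_iter + 1):
        x = rotate(x)
        if lo <= x < hi:
            times.append(n)
            if len(times) == count:
                break
    return times


times = return_times(0.05, 0.0, 0.1, count=5)
```

The list `times` contains five distinct return times, in line with the statement that almost every point returns infinitely often.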

Let's return for a moment to the proof of the Krylov-Bogolyubov theorem. From the way that the positive bounded linear functional is created we can see that there is a subsequence \(n_{k}\) such that

\[ \lim_{k\to\infty}\frac{1}{n_{k}}\sum_{i=0}^{n_{k}-1}f(F^{i}(x)) = \int_{M} fd\mu_{x} \]

whenever \(f\) is continuous, the phase space \(M\) is compact and metrizable and \(\mu_{x}\) is an invariant probability measure for \(F\). This raises a few questions: Can the sequence of time averages converge without taking a subsequence and if so for which points \(x\) does it do so? When is the invariant measure independent of \(x\)? The Birkhoff ergodic theorem answers the first of these questions.

Birkhoff ergodic theorem: Let \(F\) be a measure-preserving dynamical system on a probability space \((M,\mu)\) and let \(f\in L^{1}(M,\mu)\), i.e., \(f\) is an integrable function on \(M\) with respect to the probability measure \(\mu\). Then the limit

\[ \bar{f}(x) = \lim_{n\to\infty}\frac{1}{n}\sum_{i=0}^{n-1}f(F^{i}(x)) \]

exists for almost every \(x\in M\), it is \(\mu\)-integrable, \(F\)-invariant and

\[ \int_{M}\bar{f}d\mu = \int_{M}fd\mu. \]

So in general the time average of an integrable function \(f\) under a measure-preserving dynamical system does not equal the space average but rather it equals some other function \(\bar{f}\). However, the space average of \(\bar{f}\) equals the space average of \(f\). We therefore need some stronger condition to ensure that the time average of a function actually equals the space average. This is where the so-called ergodic dynamical systems come in. A measure-preserving dynamical system \(F\) on a probability space \((M,\mu)\) is called ergodic with respect to \(\mu\) if8 for every \(F\)-invariant measurable subset \(U\) we have either \(\mu(U) = 0\) or \(\mu(U) = 1\). The first step to seeing how this can answer our question of when the time average of a function equals the space average is to recognize that this definition is equivalent to another characterization in terms of \(F\)-invariant measurable functions. Namely, \(F\) is ergodic with respect to \(\mu\) if and only if any \(F\)-invariant measurable function \(f\) is constant outside a set of measure zero. Armed with this new knowledge and combining it with the Birkhoff ergodic theorem we get the following answer to the second question above.

Theorem: A measure-preserving dynamical system \(F\) on a probability space \((M,\mu)\) is ergodic if and only if the time average at \(x\) of any integrable function \(f\in L^{1}(M,\mu)\) equals the space average of \(f\) for almost every \(x\), i.e., if and only if

\[ \lim_{n\to\infty}\frac{1}{n}\sum_{i=0}^{n-1}f(F^{i}(x)) = \int_{M}fd\mu \]

for almost every \(x\).
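As a numerical sanity check of this statement, the following sketch again assumes the rotation of the circle \([0,1)\) by \((\sqrt{5}-1)/2\), which is ergodic with respect to Lebesgue measure, and the test function \(f(x) = \cos^{2}(2\pi x)\), whose space average is \(1/2\). The names and the number of iterations are illustrative choices.

```python
import math

ALPHA = (math.sqrt(5) - 1) / 2  # assumed irrational rotation number


def rotate(x):
    """Rotation of the circle [0, 1) by ALPHA."""
    return (x + ALPHA) % 1.0


def f(x):
    """Test function with space average 1/2 over [0, 1)."""
    return math.cos(2 * math.pi * x) ** 2


def time_average(f, F, x, n):
    """(1/n) * sum of f(F^i(x)) for i = 0, ..., n - 1."""
    total = 0.0
    for _ in range(n):
        total += f(x)
        x = F(x)
    return total / n


space_average = 0.5
averages = [time_average(f, rotate, x, 100000) for x in (0.0, 0.3, 0.77)]
```

All three time averages are close to `space_average`, regardless of the starting point.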

Multiplicative ergodic theorem and Lyapunov exponents

Finally, we will use another of the ergodic theorems, the so-called Oseledec multiplicative ergodic theorem, to reconnect with the hyperbolic dynamics from earlier. First we will need some definitions.

Let \(F\) be a differentiable map of a smooth manifold \(M\). The upper Lyapunov exponent of \((x, v)\) with respect to \(F\) is defined as

\[ \chi(x, v) = \limsup_{n\to\infty}\frac{1}{n}\log\lVert D_{x}F^{n}v\rVert \]

where \(x\in M\) and \(v\in T_{x}M\). Sometimes this is also called just the Lyapunov exponent, especially if the actual limit exists and not just the limit superior. Thus the Lyapunov exponent measures the exponential expansion rate of tangent vectors along orbits in the sense that

\[ \lVert D_{x}F^{n}v\rVert \approx e^{n\chi(x, v)}\lVert v\rVert. \]
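To make the definition concrete, here is a sketch that estimates the Lyapunov exponent for the linear map of the plane given by the matrix \(\mathrm{diag}(1/2, 2)\), an assumed example for which \(D_{x}F^{n}\) is simply the \(n\)-th power of the matrix. The function name `lyapunov_estimate` and the number of steps are illustrative.

```python
import math


def apply(M, v):
    """Apply the 2x2 matrix M to the vector v."""
    return (M[0][0] * v[0] + M[0][1] * v[1],
            M[1][0] * v[0] + M[1][1] * v[1])


def lyapunov_estimate(M, v, n=200):
    """Finite-time estimate (1/n) * log ||M^n v|| for the linear map x -> M x.

    The vector is renormalized after every step to avoid overflow; the sum of
    the logged norms telescopes to log ||M^n v||.
    """
    log_norm = 0.0
    for _ in range(n):
        v = apply(M, v)
        norm = math.hypot(v[0], v[1])
        log_norm += math.log(norm)
        v = (v[0] / norm, v[1] / norm)
    return log_norm / n


M = ((0.5, 0.0), (0.0, 2.0))
chi_stable = lyapunov_estimate(M, (1.0, 0.0))   # vector in the contracted direction
chi_generic = lyapunov_estimate(M, (1.0, 1.0))  # vector with an expanding component
```

Vectors along the contracted axis give \(\log(1/2)\), while any vector with a component along the expanding axis gives \(\log(2)\).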

It is clear that if \(F\) has uniformly bounded first derivative, for example if \(M\) is compact, then the upper Lyapunov exponent is bounded from above for every \(x\in M\) and every non-zero \(v\in T_{x}M\). Some things that are not clear are for example when the Lyapunov exponent exists as an actual limit and how it depends on \(x\). One could imagine, for example, that if \(F\) were ergodic then the Lyapunov exponent would not depend on \(x\) outside a subset of measure zero due to the way points \(x\) seem to move around all over the support of the measure. This intuition will lead us on the right track to answering these questions but first we will take a detour into linear cocycles and another ergodic theorem.

Suppose we have a measure-preserving dynamical system \(F\) defined on a probability space \((M,\mu)\) which is also a Lebesgue space9. A measurable linear cocycle over \(F\), or simply a linear cocycle, is a measurable map \(A\colon M\times\mathbb{Z}\to GL(n, \mathbb{R})\) satisfying

\[ A(x, m+n) = A(F^{n}(x), m)A(x, n) \]

for all \(m,n\in\mathbb{Z}\).

One of the main examples of how cocycles arise in dynamical systems, and the one we will be the most interested in here, is if we're starting out with a measure-preserving dynamical system that also happens to be a diffeomorphism. We can then consider the derivative \(DF\) as a cocycle by choosing10

\[ A(x, m) = D_{x}F^{m} = D_{F^{m-1}(x)}F\cdot D_{F^{m-2}(x)}F\cdot\ldots\cdot D_{x}F. \]
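The cocycle identity for the derivative is just the chain rule, which can be checked numerically. The sketch below assumes the one-dimensional diffeomorphism \(F(x) = x + 0.1\sin(x)\) of the real line, so that the matrices are \(1\times 1\) and no local trivializations are needed, and it only treats non-negative \(m\) and \(n\).

```python
import math


def F(x):
    """Assumed example: a diffeomorphism of the real line, since F'(x) > 0."""
    return x + 0.1 * math.sin(x)


def dF(x):
    """Derivative of F at x."""
    return 1.0 + 0.1 * math.cos(x)


def iterate(x, n):
    """Return F^n(x) for n >= 0."""
    for _ in range(n):
        x = F(x)
    return x


def A(x, m):
    """Derivative cocycle A(x, m) = D_x F^m for m >= 0, via the chain rule."""
    product = 1.0
    for _ in range(m):
        product *= dF(x)
        x = F(x)
    return product


x, m, n = 0.7, 3, 5
lhs = A(x, m + n)
rhs = A(iterate(x, n), m) * A(x, n)
```

Up to rounding, `lhs` and `rhs` agree, which is the identity \(A(x, m+n) = A(F^{n}(x), m)A(x, n)\) in this example.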

Keeping this example in mind we define the upper Lyapunov exponent of \(A\) at \((x, v)\) as

\[ \limsup_{n\to\infty}\frac{1}{n}\log\lVert A(x, n)v \rVert. \]

We can see that this definition reduces to the previous definition of the Lyapunov exponent if the cocycle \(A\) is given as the composition of the differential \(DF\) of the map \(F\).

One can show that for any real number \(C\) the set \(V_{C}(x) = \{v\in \mathbb{R}^{n}\colon \chi(x, v)\leq C\}\) forms a linear subspace of \(\mathbb{R}^{n}\) and these subspaces are nested as we increase the constant, i.e., if \(C_{1}\leq C_{2}\) we have \(V_{C_{1}}(x)\subset V_{C_{2}}(x)\). In fact, for each \(x\) it is possible to find a sequence of real numbers \(\chi_{1} < \chi_{2} < \ldots < \chi_{n(x)}\) and associated subspaces

\[ \{0\}\subset V_{\chi_{1}}(x)\subset V_{\chi_{2}}(x)\subset\ldots\subset V_{\chi_{n(x)}}(x) = \mathbb{R}^{n} \]

such that if \(v\in V_{\chi_{i}}(x)\setminus V_{\chi_{i-1}}(x)\) then the upper Lyapunov exponent of \((x,v)\) equals \(\chi_{i}\). With this in mind we call the numbers \(\chi_{i}\) the upper Lyapunov exponents of \(A\) at \(x\). We define the multiplicity \(d_{i}\) of the Lyapunov exponent \(\chi_{i}\) as the number \(d_{i} = \dim V_{\chi_{i}}(x) - \dim V_{\chi_{i-1}}(x)\), with the convention \(V_{\chi_{0}}(x) = \{0\}\). The Lyapunov exponents of \(A\) together with their multiplicities are called the Lyapunov spectrum of \(A\) at \(x\).

With the preliminaries out of the way we can now state the Oseledec multiplicative ergodic theorem which, like the Birkhoff ergodic theorem, will tell us about when the Lyapunov exponents (essentially the time averages in this situation) exist and when they are independent of the initial point \(x\).

Oseledec multiplicative ergodic theorem: Let \(F\) be a measure-preserving dynamical system on a probability space \((M,\mu)\) which is also a Lebesgue space and let \(A\) be a measurable linear cocycle over \(F\) such that the maps \(x\mapsto \log^{+} \lVert A(x, 1)\rVert\) and \(x\mapsto \log^{+} \lVert A(x, -1)\rVert\), where \(\log^{+}(x) = \max\{0, \log(x)\}\), are both \(L^{1}\)-integrable with respect to \(\mu\). Then there exists a subset \(U\subset M\) of full measure such that for each \(x\in U\) we have the following:

- There exists a decomposition of \(\mathbb{R}^{n}\) by subspaces \(E_{i}(x)\) for \(i = 1,\ldots, n(x)\), i.e., \[ \mathbb{R}^{n} = \bigoplus_{i = 1}^{n(x)}E_{i}(x) \] which is invariant under the induced map of \(M\times \mathbb{R}^{n}\) given by \((x,v)\mapsto (F(x), A(x, 1)v)\).

- The Lyapunov exponents \(\chi_{1}(x) < \chi_{2}(x) < \ldots < \chi_{n(x)}(x)\) associated with each \(E_{i}(x)\) all exist and are \(F\)-invariant.

- For all non-zero vectors \(v\in E_{i}(x)\) we have \[ \lim_{n\to\pm\infty}\frac{1}{|n|}\log\frac{\lVert A(x, n)v\rVert}{\lVert v\rVert} = \pm\chi_{i}(x) \] where convergence is uniform in \(v\).

Furthermore, if \(F\) is ergodic with respect to \(\mu\) then the Lyapunov exponents are independent of \(x\).

Now let's go back to the cocycle given by iteration of the differential of \(F\) considered above. For simplicity, let's assume that \(M\) is a compact smooth manifold and that \(F\) is a diffeomorphism of \(M\) which is hyperbolic. Consider a vector \(v\) in the stable subspace \(E^{s}(x)\subset T_{x}M\) where \(x\) is in some hyperbolic set \(U\) for \(F\). We then know from hyperbolicity that there is some constant \(C > 0\) and some constant \(0 < \lambda < 1\) such that

\[ \lVert D_{x}F^{n}v\rVert \leq C\lambda^{n}\lVert v\rVert \]

for all \(n\geq 0\). On the other hand, let's consider the Lyapunov exponent:

\[ \chi(x, v) = \limsup_{n\to\infty}\frac{1}{n}\log\lVert D_{x}F^{n}v\rVert \leq \limsup_{n\to\infty}\frac{1}{n}\log\lVert C\lambda^{n}v \rVert = \log(\lambda) \]
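Spelling out the last equality for a non-zero vector \(v\), with the same constants \(C\) and \(\lambda\) as above:

\[ \frac{1}{n}\log\left(C\lambda^{n}\lVert v\rVert\right) = \frac{\log(C) + \log\lVert v\rVert}{n} + \log(\lambda) \xrightarrow[n\to\infty]{} \log(\lambda) < 0. \]

The constant \(C\) and the length of \(v\) are washed out by the factor \(\frac{1}{n}\), so every non-zero vector in the stable subspace has a strictly negative Lyapunov exponent. In the same way, the estimate for \(F^{-1}\) on the unstable subspace gives \(\lVert D_{x}F^{n}v\rVert \geq C^{-1}\lambda^{-n}\lVert v\rVert\) for \(v\in E^{u}(x)\), and hence a Lyapunov exponent of at least \(-\log(\lambda) > 0\) there.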

It is clear from this that the Lyapunov exponent is related to the hyperbolicity of \(F\). Picking an invariant measure11 on \(U\) we can also use the multiplicative ergodic theorem to guarantee us the existence of all the Lyapunov exponents. These can then be seen as a further refinement of the constant \(\lambda\) giving the local rate of contraction and expansion in the stable and unstable manifolds.

Given that the Lyapunov exponents are related to ideas of contraction and expansion but have a wider range of applicability than just hyperbolicity, they have found frequent use in generalizations of hyperbolicity, such as nonuniform hyperbolicity and partial hyperbolicity. They also feature prominently in the theory surrounding chaotic dynamical systems, which we will explore in the next part of this series.

Further Reading

Here are some links to free online resources for those that are interested in reading more about the topics covered in this blog post.

- Dynamical systems

- Hyperbolic sets

- Invariant manifold

- Poincaré recurrence theorem

- Ergodic theory

- Lyapunov exponents

Here are also some recommended textbooks for those wishing to learn dynamical systems.

-

An Introduction to Chaotic Dynamical Systems by Robert L. Devaney

A fantastic introduction to dynamical systems, suitable at an undergraduate level. Presents many interesting topics of dynamics, including chaos and complex dynamics, while also focusing on one, two and sometimes three dimensional systems in order to keep the presentation simple and intuitive. Also includes several pictures of the phenomena being discussed, which help understanding.

-

Introduction to Dynamical Systems by Michael Brin and Garrett Stuck

Another good introductory textbook on dynamical systems offering a concise presentation of a very well chosen set of topics. More advanced textbook that doesn't shy away from presenting results in much more generality, including using \(n\)-dimensional manifolds as phase spaces, hence more suited for the graduate level.

-

Introduction to the Modern Theory of Dynamical Systems by Anatole Katok and Boris Hasselblatt

Graduate level textbook covering many topics of dynamical systems, from the basic definitions and central results, to more specialized or advanced topics not usually appearing in a general textbook, such as Aubry-Mather sets and geodesic flows. Definitely requires more mathematical maturity than the previous two. Also unlike the previous two books it covers a good deal of continuous time dynamics. This is pretty much the endgame as far as books on general dynamical systems go. To continue from here requires diving into more specialized advanced textbooks.

-

Undergraduate level textbook focusing on systems of ordinary differential equations and continuous time dynamical systems. Provides a very good introduction to the fundamentals of both ordinary differential equations and continuous time dynamical systems. Has a large amount of very good examples, illustrations, exercises and applications.

-

For a continuous time dynamical system a fixed point would be a point \(x\) such that \(\Phi(t, x) = x\) for all \(t\). ↩

-

Note that for a periodic point the orbit \(\{F^{i}(x_{0})\colon i\geq 0\}\) is finite since it repeats starting with \(F^{n}(x_{0}) = x_{0}\). ↩

-

It can be considered a special case of the Hadamard-Perron theorem but stating it in such generality quickly becomes unwieldy for a small blog post. Consult a proper textbook on dynamical systems to enjoy the full statement of this important theorem. ↩

-

Another way of stating this is in terms of the pushforward measure: \(F_{*}(\mu) = \mu\). ↩

-

The full statement, which is more general, is about Hilbert spaces, see Riesz representation theorem. ↩

-

This can be done since by the Stone-Weierstrass theorem the space \(C(M)\) is separable and hence it has a countable dense subset. ↩

-

In fact, certain measure-preserving dynamical systems also have a stronger property called mixing. We will not discuss this here. ↩

-

It is also common to say that \(\mu\) is ergodic with respect to \(F\). ↩

-

A Lebesgue space is a measure space with finite measure which is isomorphic to a union of an interval \([0,a]\) with Lebesgue measure and at most countably many points of positive measure. ↩

-

We are ignoring some technical details here, like choosing local trivializations on \(M\), for the sake of readability. ↩

-

It turns out that non-trivial, i.e., not just a periodic orbit, hyperbolic sets have many invariant measures. In particular, for each periodic orbit in the hyperbolic set we have an atomic invariant measure supported on that periodic orbit and since there are infinitely many periodic orbits we also have infinitely many invariant measures. Additional invariant measures can then be created as the weak limit of these measures. ↩